OMERO ADI Container

The OMERO Automated Data Import (ADI) service handles automated data import workflows and order management.

Overview

OMERO ADI provides:

- Automated Import: hands-free data import from configured directories
- Order Management: import job queuing and tracking
- File Monitoring: automated detection of new data files
- Integration: seamless connection with the OMERO server and web interface

See the OMERO ADI README for detailed setup, configuration, and usage.

Import Workflow

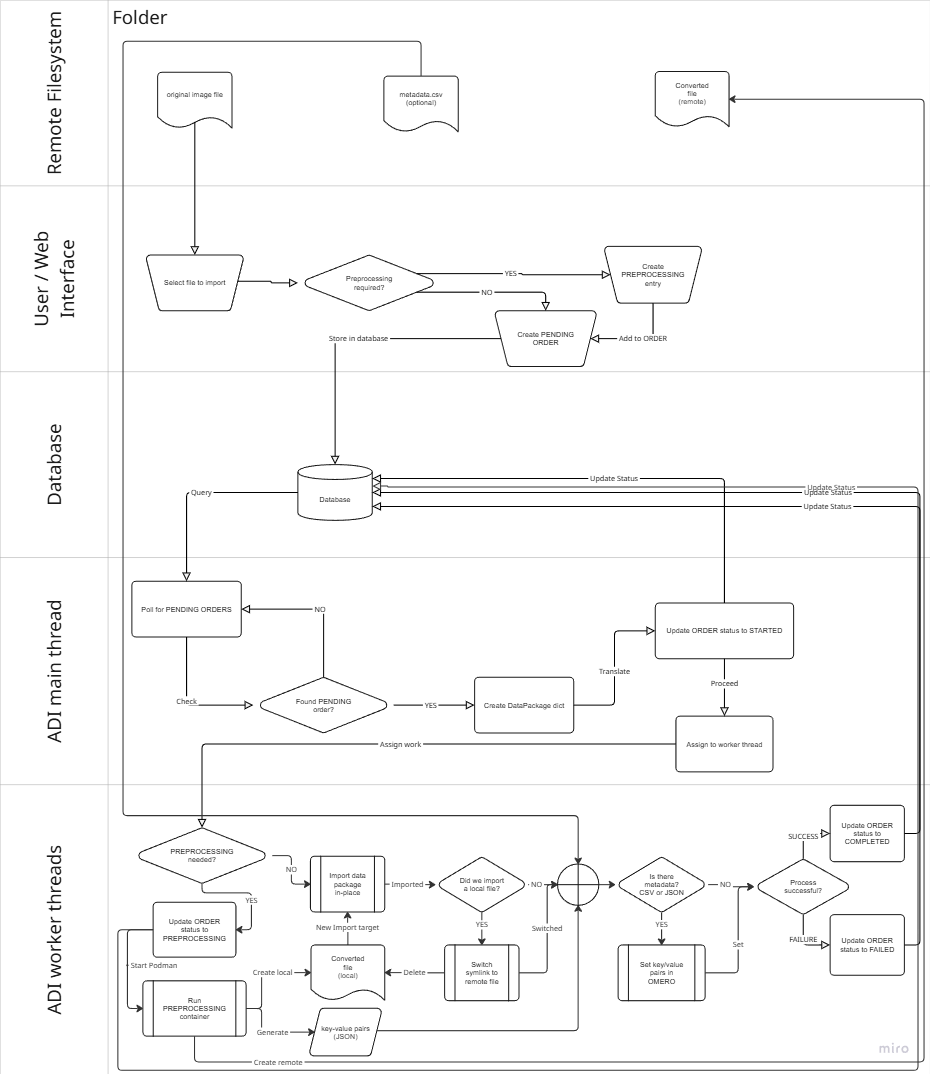

High-level flow of the OMERO ADI import process, from user request and database orchestration to worker thread execution.
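As a rough mental model of the worker stage only, the Python sketch below picks up orders in the 'Import Pending' stage and processes them on a thread pool capped at max_workers (see Configuration). The Order class, the import_order placeholder and the 'Import Completed' stage value are hypothetical; this is not ADI's actual scheduler.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Order:
    # Hypothetical minimal order record; the real imports table has more columns.
    uuid: str
    stage: str = "Import Pending"


def import_order(order: Order) -> Order:
    # Placeholder for the real per-order work (optional preprocessing + OMERO import).
    # "Import Completed" is a made-up stage name for this sketch.
    order.stage = "Import Completed"
    return order


def run_pending(orders: list[Order], max_workers: int = 4) -> list[Order]:
    # Only pending orders are picked up; orders in any other stage are left untouched.
    pending = [o for o in orders if o.stage == "Import Pending"]
    # At most max_workers orders are processed concurrently.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(import_order, pending))
```

A thread pool fits this kind of workload because each import mostly waits on I/O (network, disk, the OMERO CLI), so threads rather than processes are usually sufficient.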

Import order creation and management

Orders are managed in PostgreSQL. ADI creates the tables via SQLAlchemy at startup, in the database given by the INGEST_TRACKING_DB_URL environment variable (preferred) or by the ingest_tracking_db setting in settings.yml (see Configuration below).
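The lookup order described above (environment variable first, then settings.yml) can be sketched as follows. This assumes settings.yml has already been parsed into a dict (for example with PyYAML's yaml.safe_load) and that ingest_tracking_db holds a full database URL; resolve_db_url is a hypothetical helper, not part of omero_adi. The resulting URL is the kind of string SQLAlchemy's create_engine accepts.

```python
import os
from collections.abc import Mapping


def resolve_db_url(settings: Mapping, env: Mapping = os.environ) -> str:
    """Return the tracking-DB URL, preferring the environment over settings.yml.

    Hypothetical helper: `settings` is the already-parsed settings.yml content.
    """
    url = env.get("INGEST_TRACKING_DB_URL") or settings.get("ingest_tracking_db")
    if not url:
        raise RuntimeError("No ingest tracking database configured")
    return url
```

Keeping the URL in the environment means credentials never have to be written into a bind-mounted settings.yml.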

Ways to create orders:

- OMERO.biomero web plugin: uses the omero_adi Python library to insert into the main imports table and, when needed, the preprocessing table.
- Direct SQL: insert directly into the database (see the OMERO ADI README for schema details).
- Programmatically: use the omero_adi Python library from any client.

Database access:

See Database Containers for information on how to access the PostgreSQL database.

You can also browse tables in Metabase. See Metabase Container. Note: Metabase browsing is read-focused; creating new orders is typically done via OMERO.biomero or direct SQL.

File system monitoring and processing

The shared file system is mounted into the omeroadi container at /data, and the ADI user must have read/write permissions on it. Orders can reference any files or folders under this mount. The OMERO.biomero app can restrict the selectable folders per group in its UI; the database itself does not enforce these restrictions.

When preprocessing is enabled, converted files are written back alongside the originals, in a .processed subfolder within the same directory. All imports are in-place, so OMERO.server must mount the same storage at the same path for symlink-based imports to work.
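The path conventions above can be illustrated with a small Python sketch: one check that a path lies under the /data mount, and one that computes the .processed folder next to an original file. Both helpers are hypothetical and purely lexical (they do not resolve ".." or symlinks); remember that the database itself does not enforce the mount restriction.

```python
from pathlib import PurePosixPath

DATA_MOUNT = PurePosixPath("/data")  # shared storage mount inside omeroadi


def is_under_mount(path: PurePosixPath, mount: PurePosixPath = DATA_MOUNT) -> bool:
    # Lexical check only: does not resolve ".." components or symlinks.
    return path == mount or mount in path.parents


def processed_dir(original: PurePosixPath) -> PurePosixPath:
    # Converted output lives in a ".processed" subfolder in the original's directory.
    return original.parent / ".processed"
```

For example, an original at /data/group/exp/img.czi would have its converted output under /data/group/exp/.processed.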

For large or long-running imports, enable preprocessing: ADI then imports from local temporary storage on OMERO.server and afterwards redirects the symlinks to the network location. This reduces the risk of network problems interrupting an in-place import.
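"Redirecting a symlink" can be done atomically on POSIX filesystems by creating a new link under a temporary name and renaming it over the old one, so readers never observe a missing link. The sketch below shows that general technique; redirect_symlink is a hypothetical helper, and ADI's actual redirection logic may differ.

```python
import os
import uuid


def redirect_symlink(link_path: str, new_target: str) -> None:
    """Point an existing symlink at new_target, replacing it atomically (POSIX)."""
    # Create the replacement link under a unique temporary name in the same directory.
    tmp_link = f"{link_path}.tmp-{uuid.uuid4().hex}"
    os.symlink(new_target, tmp_link)
    # rename(2) replaces the directory entry itself, not the file the old link points to.
    os.replace(tmp_link, link_path)
```

The temporary link must live on the same filesystem as the final one, which is why it is created next to link_path rather than in a global temp directory.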

Configuration

settings.yml is read at startup. Prefer environment variables for secrets and URLs. Key options:

- ingest_tracking_db (or INGEST_TRACKING_DB_URL via environment variable)
- log_level, log_file_path
- max_workers
- OMERO CLI import tuning per worker: parallel_upload_per_worker, parallel_filesets_per_worker
- OMERO CLI import skips: skip_all, skip_checksum, skip_minmax, skip_thumbnails, skip_upgrade

You can bind-mount a customized settings.yml into the container to override the defaults. Some settings can be tuned for performance depending on your storage and the shape of your data.
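To see how the per-worker tuning and skip options could relate to the OMERO CLI, the sketch below maps a parsed settings dict onto real `omero import` options (--transfer=ln_s for symlink-based in-place import, --parallel-upload, --parallel-fileset, --skip). The cli_import_args function is hypothetical, and whether ADI passes exactly these flags is an assumption; check the OMERO ADI README for the actual behavior.

```python
def cli_import_args(settings: dict) -> list[str]:
    """Translate ADI-style settings into `omero import` arguments (hypothetical mapping)."""
    args = ["--transfer=ln_s"]  # in-place import using symlinks
    if settings.get("parallel_upload_per_worker"):
        args.append(f"--parallel-upload={settings['parallel_upload_per_worker']}")
    if settings.get("parallel_filesets_per_worker"):
        args.append(f"--parallel-fileset={settings['parallel_filesets_per_worker']}")
    # Each enabled skip_<step> setting becomes one --skip=<step> option.
    for step in ("all", "checksum", "minmax", "thumbnails", "upgrade"):
        if settings.get(f"skip_{step}"):
            args.append(f"--skip={step}")
    return args
```

Since the parallelism settings are per worker, the total load on OMERO.server grows roughly with max_workers times these values.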

Integration with OMERO server

The omeroadi container shares both /OMERO and /data with OMERO.server; this is required for preprocessing and in-place imports. ADI authenticates to OMERO as root, then switches context to the requesting user and group so that the import is performed as that user.

Custom import pipeline development

See the OMERO ADI README for container interfaces and examples.

Provide a standalone Docker/Podman-compatible container that follows the IO conventions (inputs/outputs and optional JSON metadata). ADI will run it via podman-in-docker/podman-in-podman.
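The general shape of such a container's entry point (one input in, converted output plus optional JSON metadata out) might look like the Python sketch below. Everything here is hypothetical: the function name, the output file naming and the metadata keys are illustrative, the "conversion" is a plain copy, and the real argument names and layout are defined in the OMERO ADI README.

```python
import json
import shutil
from pathlib import Path


def preprocess(input_file: Path, output_folder: Path) -> Path:
    """Hypothetical entry point: read one input, write converted output + JSON metadata."""
    output_folder.mkdir(parents=True, exist_ok=True)
    # Stand-in for a real conversion (e.g. to OME-TIFF); this sketch only copies bytes.
    converted = output_folder / (input_file.stem + ".ome.tiff")
    shutil.copyfile(input_file, converted)
    # Optional JSON metadata describing what was produced (hypothetical schema).
    metadata = {"source": input_file.name, "outputs": [converted.name]}
    (output_folder / "metadata.json").write_text(json.dumps(metadata, indent=2))
    return converted
```

Keeping the container to a plain file-in, files-out contract makes it easy to test on its own before handing it to ADI.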

Windows note: preprocessing containers should run as root inside the container to avoid file permission issues on mounted volumes. On Linux, podman's --userns=keep-id option can help when running as a non-root user, but Docker on Windows commonly requires root.

Files that do not need preprocessing are imported in-place with the OMERO CLI (Bio-Formats), via ADI's ezomero/CLI integration.

Error handling and retry mechanisms

- Logs: application and import logs are written inside the container (default: /auto-importer/logs). Each import also has its own dedicated OMERO CLI log.
- Failed imports: orders are marked as FAILED and are not retried automatically. To re-run an order, set it back to PENDING (stage 'Import Pending').

Example to retry a specific order by UUID:

```sql
UPDATE imports
SET stage = 'Import Pending'
WHERE uuid = '00000000-0000-0000-0000-000000000000';
```
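The same retry can be scripted with a parameterized query, which avoids pasting user-supplied UUIDs into SQL strings. To keep the sketch self-contained it uses Python's built-in sqlite3 as a stand-in; against the real PostgreSQL database you would use a driver such as psycopg, whose placeholders are %s instead of ?. The retry_order helper is hypothetical, and the test's two-column table is a minimal stand-in for the real schema.

```python
import sqlite3


def retry_order(conn: sqlite3.Connection, order_uuid: str) -> bool:
    """Set one order back to 'Import Pending'; return True if a row was updated."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "UPDATE imports SET stage = ? WHERE uuid = ?",
            ("Import Pending", order_uuid),
        )
    return cur.rowcount == 1
```

Checking rowcount catches a mistyped UUID, which would otherwise update nothing without any error.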

Testing

Quick checks for a running deployment:

Run a small end-to-end test order:

```bash
# ADI upload health check
podman exec -it omeroadi /bin/bash -c "python tests/t_main.py"
```

Test podman-in-podman:

```bash
# Podman-in-podman test run
podman exec -it omeroadi /bin/bash -c "podman run docker.io/godlovedc/lolcow"
```

See the OMERO ADI README for details on configuring settings.yml to point to your demo file and target destination.

Security and runtime requirements

Note

ADI runs podman-in-podman (or podman-in-docker). The container currently requires --privileged and access to /dev/fuse to run preprocessing containers as the ADI user. Some reports suggest alternatives may be possible on specific platforms, but our attempts to run without --privileged have not yet been reliable. A possible future improvement is to switch the podman engine that runs ADI to a different model, so that the inner podman can run without --privileged.